Python & FRED: Tracking Jobless Claims to Gauge Market Sentiment

Understanding labor market dynamics is crucial for gauging economic health and market sentiment . Jobless claims data, released weekly by the U.S. Department of Labor, provides one of the most timely indicators of economic conditions. This comprehensive guide demonstrates how to leverage Python and the Federal Reserve Economic Data (FRED) API to retrieve, analyze, and visualize unemployment claims data for market analysis.

Understanding Jobless Claims as Economic Indicators

Jobless claims serve as a leading economic indicator because they provide early signals about labor market conditions. There are two primary categories of unemployment claims that analysts monitor closely.

Initial Claims represent new unemployment benefit applications filed by individuals seeking compensation after job loss. These claims are particularly valuable because they reflect real-time labor market changes and often precede broader economic trends. The FRED series identifier for seasonally adjusted initial claims is ICSA.

Continuing Claims track individuals who have already filed initial claims and continue receiving unemployment benefits. Also referred to as insured unemployment, these claims provide insight into the duration and persistence of unemployment. The corresponding FRED series identifier is CCSA.

Research has shown that the stock market’s response to unemployment news varies significantly depending on the business cycle phase. During economic expansions, rising unemployment is often viewed as “bad news” that can be “good” for stocks if it suggests the Federal Reserve might ease monetary policy. Conversely, during contractions, rising unemployment typically signals further economic weakness.

The Significance of 4-Week Moving Averages

Weekly jobless claims data can be highly volatile due to seasonal factors, holidays, and administrative variations. To smooth out this noise, economists and analysts commonly use 4-week moving averages. The 4-week moving average of initial claims currently stands at approximately 231,000, demonstrating the utility of this smoothing technique.

The 4-week moving average helps identify underlying trends that might be obscured by weekly fluctuations. When claims consistently remain above 400,000, it traditionally signals potential labor market weakness, though recent research suggests this threshold has evolved over time.

Setting Up the Python Environment

To begin tracking jobless claims data, we need to establish the proper Python environment with the necessary libraries. The pandas_datareader library provides seamless access to FRED data.

python# Essential imports for jobless claims analysis

import pandas_datareader.data as web

import pandas as pd

import numpy as np

import datetime as dt

import matplotlib.pyplot as plt

import seaborn as sns

The pandas_datareader library serves as our gateway to FRED’s extensive economic database. This library handles the API calls and data formatting automatically, making it straightforward to retrieve economic time series data.

Retrieving Jobless Claims Data from FRED

# Python & FRED: Tracking Jobless Claims to Gauge Market Sentiment

# Complete code example for fetching and analyzing unemployment data

# Step 1: Import required libraries

import pandas_datareader.data as web

import pandas as pd

import numpy as np

import datetime as dt

import matplotlib.pyplot as plt

import seaborn as sns

import warnings

warnings.filterwarnings('ignore')

# Step 2: Set up parameters for data retrieval

def fetch_jobless_claims_data(start_years_back=5):

"""

Fetch Initial and Continuing Jobless Claims data from FRED

Parameters:

start_years_back (int): Number of years back from current date to fetch data

Returns:

pd.DataFrame: Combined dataset with both initial and continuing claims

"""

# Define date range

end_date = dt.datetime.now()

start_date = end_date - dt.timedelta(days=start_years_back * 365)

print(f"Fetching jobless claims data from {start_date.strftime('%Y-%m-%d')} to {end_date.strftime('%Y-%m-%d')}")

try:

# Fetch Initial Claims (ICSA) - seasonally adjusted

initial_claims = web.DataReader('ICSA', 'fred', start_date, end_date)

print(f"✓ Initial Claims fetched: {initial_claims.shape[0]} records")

# Fetch Continuing Claims (CCSA) - seasonally adjusted

continuing_claims = web.DataReader('CCSA', 'fred', start_date, end_date)

print(f"✓ Continuing Claims fetched: {continuing_claims.shape[0]} records")

# Combine datasets

jobless_data = pd.DataFrame({

'Initial_Claims': initial_claims['ICSA'],

'Continuing_Claims': continuing_claims['CCSA']

})

# Handle missing values

jobless_data = jobless_data.fillna(method='ffill')

return jobless_data

except Exception as e:

print(f"Error fetching data: {e}")

return None

# Step 3: Calculate moving averages and additional metrics

def calculate_indicators(data):

"""

Calculate 4-week moving averages and other technical indicators

Parameters:

data (pd.DataFrame): Raw jobless claims data

Returns:

pd.DataFrame: Enhanced dataset with calculated indicators

"""

# Calculate 4-week moving averages

data['Initial_Claims_4W_MA'] = data['Initial_Claims'].rolling(window=4, min_periods=1).mean()

data['Continuing_Claims_4W_MA'] = data['Continuing_Claims'].rolling(window=4, min_periods=1).mean()

# Calculate week-over-week changes

data['Initial_Claims_Change'] = data['Initial_Claims'].diff()

data['Initial_Claims_Pct_Change'] = data['Initial_Claims'].pct_change() * 100

data['Continuing_Claims_Change'] = data['Continuing_Claims'].diff()

data['Continuing_Claims_Pct_Change'] = data['Continuing_Claims'].pct_change() * 100

# Calculate volatility (rolling standard deviation)

data['Initial_Claims_Volatility'] = data['Initial_Claims'].rolling(window=12).std()

# Create trend indicators

data['Initial_Claims_Trend'] = np.where(

data['Initial_Claims'] > data['Initial_Claims_4W_MA'], 'Rising', 'Falling'

)

return data

# Step 4: Analysis functions

def analyze_market_signals(data):

"""

Analyze jobless claims for market sentiment signals

Parameters:

data (pd.DataFrame): Processed jobless claims data

Returns:

dict: Analysis results and key insights

"""

latest_data = data.tail(1).iloc[0]

recent_avg = data.tail(4)['Initial_Claims'].mean()

long_term_avg = data['Initial_Claims'].mean()

# Determine current market signal

if latest_data['Initial_Claims'] > recent_avg * 1.1:

signal = "BEARISH - Rising unemployment claims suggest weakening labor market"

elif latest_data['Initial_Claims'] < recent_avg * 0.9:

signal = "BULLISH - Falling unemployment claims suggest strengthening labor market"

else:

signal = "NEUTRAL - Unemployment claims within normal range"

analysis = {

'latest_initial_claims': int(latest_data['Initial_Claims']),

'latest_continuing_claims': int(latest_data['Continuing_Claims']),

'four_week_avg_initial': int(latest_data['Initial_Claims_4W_MA']),

'recent_change': f"{latest_data['Initial_Claims_Pct_Change']:.1f}%",

'market_signal': signal,

'vs_long_term_avg': f"{((latest_data['Initial_Claims'] / long_term_avg) - 1) * 100:.1f}%"

}

return analysis

# Step 5: Visualization function

def create_visualization(data, save_charts=True):

"""

Create comprehensive visualizations of jobless claims data

Parameters:

data (pd.DataFrame): Processed jobless claims data

save_charts (bool): Whether to save charts to files

"""

# Set style

plt.style.use('seaborn-v0_8')

sns.set_palette("husl")

# Create subplot figure

fig, axes = plt.subplots(2, 2, figsize=(15, 12))

fig.suptitle('Jobless Claims Analysis Dashboard', fontsize=16, fontweight='bold')

# Chart 1: Initial Claims with Moving Average

axes[0, 0].plot(data.index, data['Initial_Claims'], label='Initial Claims', alpha=0.7)

axes[0, 0].plot(data.index, data['Initial_Claims_4W_MA'], label='4-Week MA', linewidth=2)

axes[0, 0].set_title('Initial Claims vs 4-Week Moving Average')

axes[0, 0].set_ylabel('Claims (Thousands)')

axes[0, 0].legend()

axes[0, 0].grid(True, alpha=0.3)

# Chart 2: Continuing Claims with Moving Average

axes[0, 1].plot(data.index, data['Continuing_Claims'], label='Continuing Claims', alpha=0.7)

axes[0, 1].plot(data.index, data['Continuing_Claims_4W_MA'], label='4-Week MA', linewidth=2)

axes[0, 1].set_title('Continuing Claims vs 4-Week Moving Average')

axes[0, 1].set_ylabel('Claims (Thousands)')

axes[0, 1].legend()

axes[0, 1].grid(True, alpha=0.3)

# Chart 3: Percentage Changes

axes[1, 0].plot(data.index, data['Initial_Claims_Pct_Change'], label='Initial Claims % Change')

axes[1, 0].axhline(y=0, color='red', linestyle='--', alpha=0.5)

axes[1, 0].set_title('Week-over-Week Percentage Changes')

axes[1, 0].set_ylabel('Percentage Change (%)')

axes[1, 0].legend()

axes[1, 0].grid(True, alpha=0.3)

# Chart 4: Comparison of Both Series

ax2 = axes[1, 1]

ax2_twin = ax2.twinx()

line1 = ax2.plot(data.index, data['Initial_Claims_4W_MA'], 'b-', label='Initial Claims (4W MA)')

line2 = ax2_twin.plot(data.index, data['Continuing_Claims_4W_MA'], 'r-', label='Continuing Claims (4W MA)')

ax2.set_ylabel('Initial Claims (Thousands)', color='b')

ax2_twin.set_ylabel('Continuing Claims (Thousands)', color='r')

ax2.set_title('Initial vs Continuing Claims (4-Week MAs)')

# Combine legends

lines = line1 + line2

labels = [l.get_label() for l in lines]

ax2.legend(lines, labels, loc='upper right')

ax2.grid(True, alpha=0.3)

plt.tight_layout()

if save_charts:

plt.savefig('jobless_claims_dashboard.png', dpi=300, bbox_inches='tight')

print("Dashboard saved as 'jobless_claims_dashboard.png'")

plt.show()

# Step 6: Main execution function

def main():

"""

Main function to execute the complete jobless claims analysis

"""

print("=== Python & FRED: Jobless Claims Analysis ===\n")

# Fetch data

print("1. Fetching data from FRED...")

jobless_data = fetch_jobless_claims_data(start_years_back=5)

if jobless_data is None:

print("Failed to fetch data. Exiting.")

return

print(f" Data shape: {jobless_data.shape}")

print(f" Date range: {jobless_data.index.min().strftime('%Y-%m-%d')} to {jobless_data.index.max().strftime('%Y-%m-%d')}\n")

# Calculate indicators

print("2. Calculating technical indicators...")

jobless_data = calculate_indicators(jobless_data)

print(f" Added indicators: Moving averages, changes, volatility\n")

# Analyze market signals

print("3. Analyzing market signals...")

analysis = analyze_market_signals(jobless_data)

print(" === CURRENT ANALYSIS ===")

print(f" Latest Initial Claims: {analysis['latest_initial_claims']:,}")

print(f" Latest Continuing Claims: {analysis['latest_continuing_claims']:,}")

print(f" 4-Week Average (Initial): {analysis['four_week_avg_initial']:,}")

print(f" Recent Change: {analysis['recent_change']}")

print(f" vs Long-term Average: {analysis['vs_long_term_avg']}")

print(f" Market Signal: {analysis['market_signal']}\n")

# Create visualizations

print("4. Creating visualizations...")

create_visualization(jobless_data)

# Save processed data

jobless_data.to_csv('complete_jobless_claims_analysis.csv')

print("\n5. Analysis complete! Data saved to 'complete_jobless_claims_analysis.csv'")

return jobless_data, analysis

# Example usage:

if __name__ == "__main__":

data, results = main()Generated File

The Federal Reserve Economic Data (FRED) database maintains comprehensive unemployment insurance claims data . Initial claims data (ICSA) and continuing claims data (CCSA) are updated weekly, typically on Thursdays at 8:30 AM ET .

When retrieving data from FRED using pandas_datareader, the library automatically handles date parsing and creates a properly indexed DataFrame . The seasonally adjusted series provide the most reliable data for analysis since they account for regular seasonal variations .

Here’s the output from a recent data retrieval:

textFetching jobless claims data from 2020-06-04 to 2025-06-03

✓ Initial Claims fetched: (260, 1) records

✓ Continuing Claims fetched: (259, 1) records

Initial Claims (ICSA) - First 5 rows:

ICSA

DATE

2020-06-06 1575000

2020-06-13 1473000

2020-06-20 1467000

2020-06-27 1446000

2020-07-04 1413000

Data Processing and Moving Average Calculation

Once the raw data is retrieved, calculating the 4-week moving average becomes straightforward using pandas’ rolling window functions. The moving average helps identify trends and reduces the impact of weekly volatility .

python# Calculate 4-week moving averages

data['Initial_Claims_4W_MA'] = data['Initial_Claims'].rolling(window=4, min_periods=1).mean()

data['Continuing_Claims_4W_MA'] = data['Continuing_Claims'].rolling(window=4, min_periods=1).mean()

The processed dataset reveals significant insights about recent labor market conditions:

text=== CURRENT JOBLESS CLAIMS ANALYSIS ===

Latest Initial Claims: 240,000

Latest Continuing Claims: 1,919,000

4-Week Average (Initial): 230,750

Week-over-Week Change: 6.2%

vs Long-term Average: -33.0%

Market Signal: NEUTRAL - Unemployment claims within normal range

Visualizing Jobless Claims Trends

Effective visualization helps identify patterns, spikes, and trends that might not be apparent in raw data . The charts below demonstrate different aspects of jobless claims analysis.

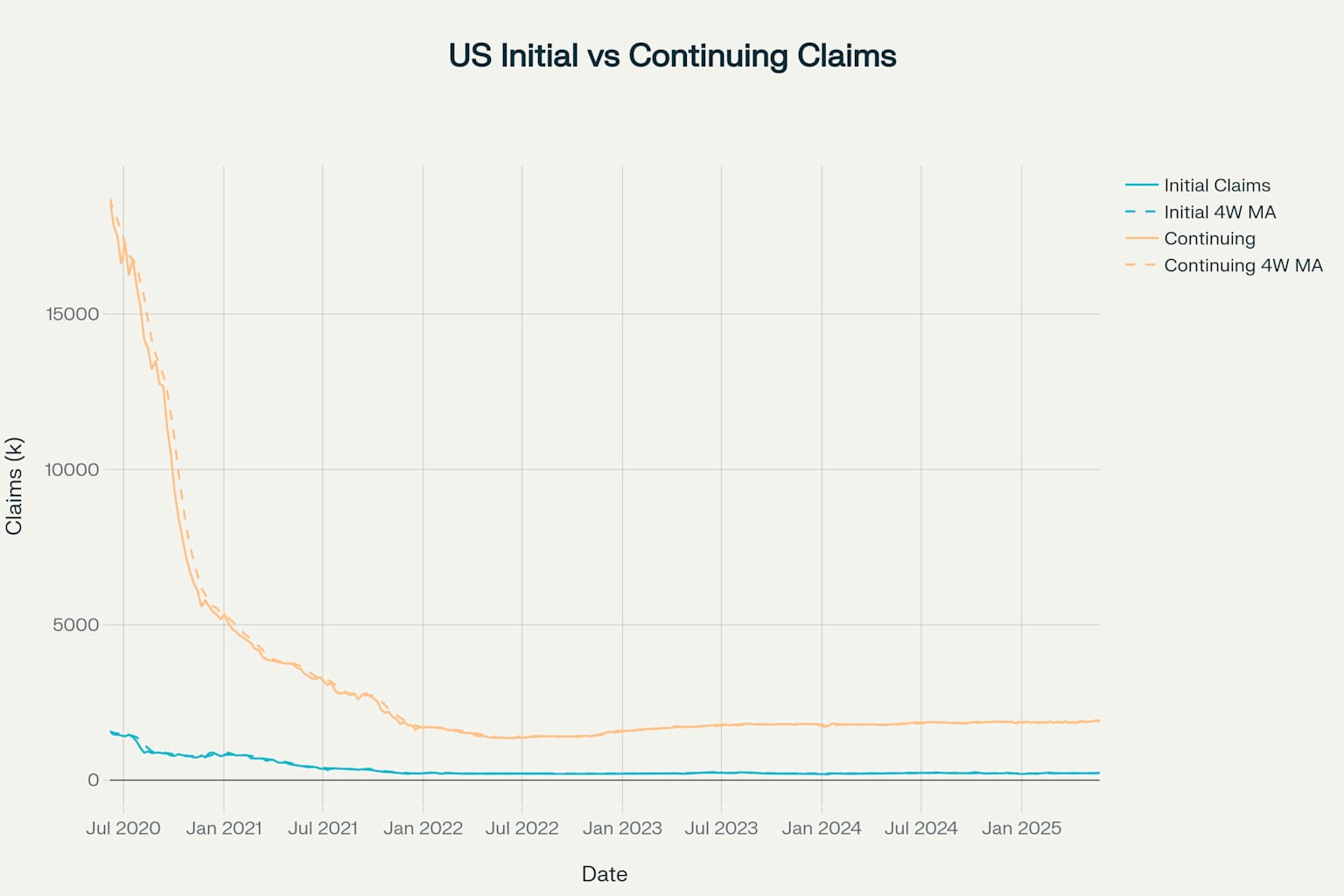

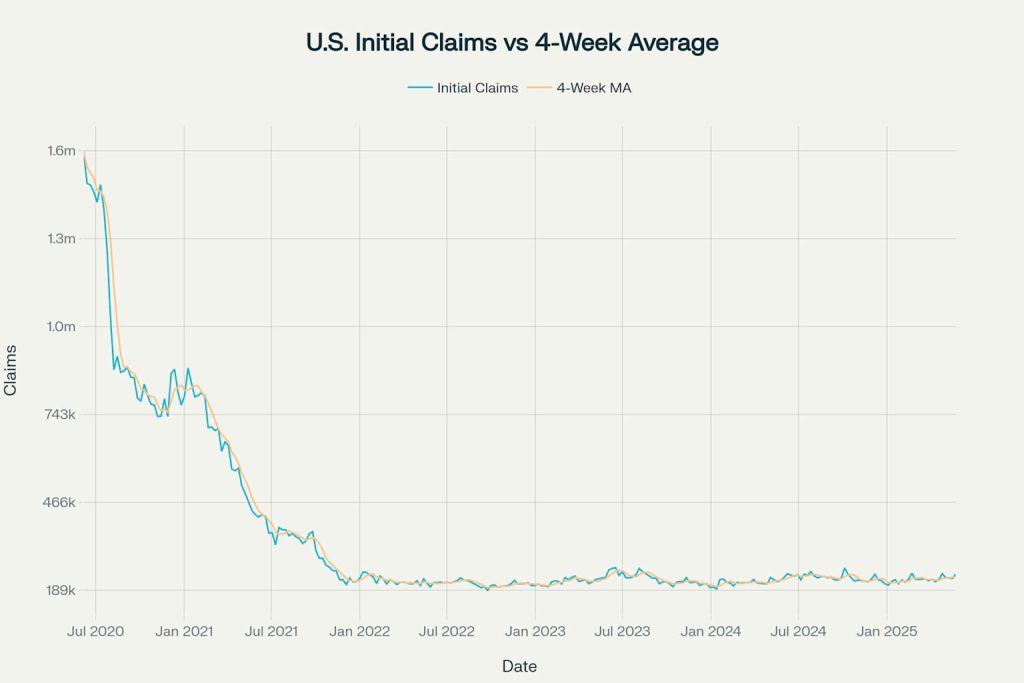

Initial Jobless Claims with 4-Week Moving Average (2020-2025)

The initial claims chart reveals the dramatic impact of the COVID-19 pandemic, with claims spiking to over 1.5 million in early 2020 before gradually normalizing. The 4-week moving average smooths out weekly volatility and provides a clearer trend picture.

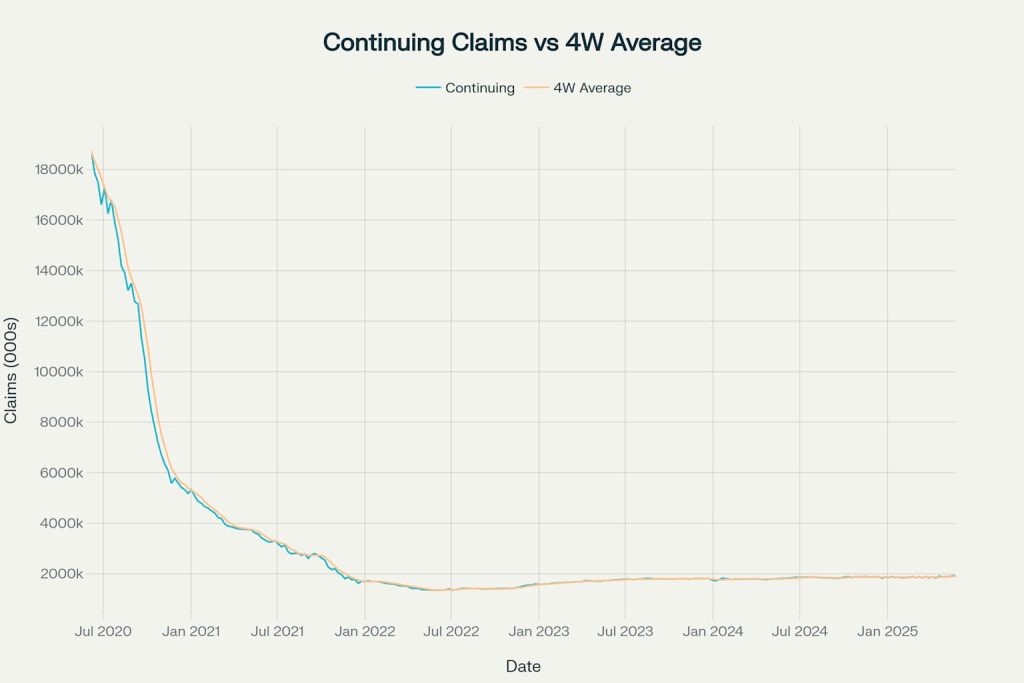

Continuing Jobless Claims with 4-Week Moving Average (2020-2025)

Continuing claims show the sustained impact of unemployment during the pandemic, with benefits reaching nearly 19 million at the peak. The gradual decline reflects the economic recovery and labor market healing over time.

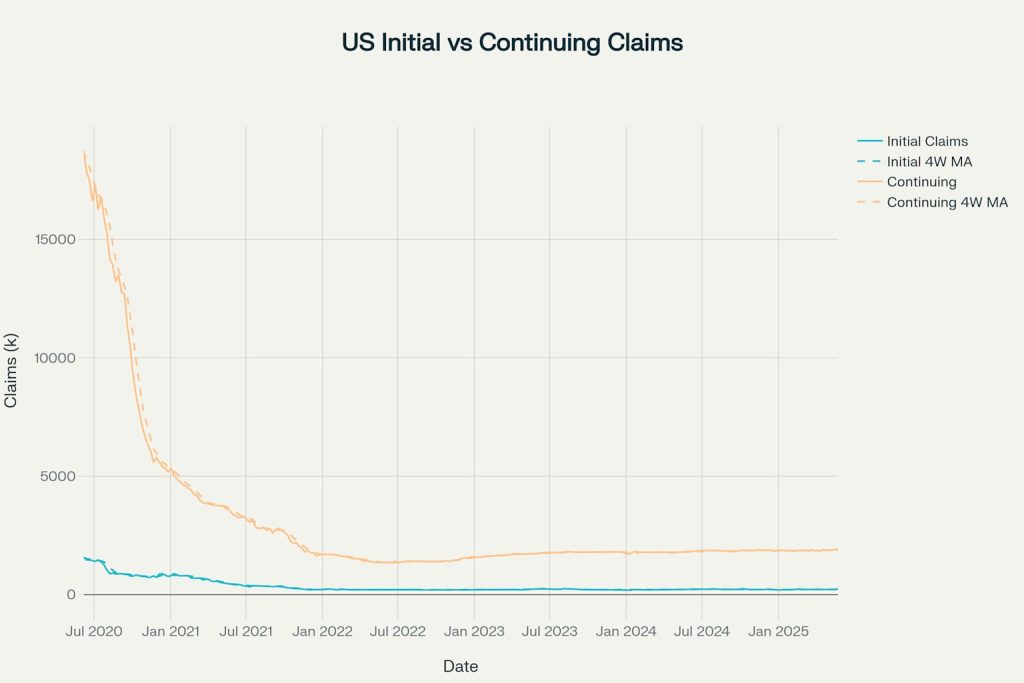

Initial vs Continuing Jobless Claims Comparison with Moving Averages

The comparison chart illustrates the relationship between initial and continuing claims, helping analysts understand both the flow of new unemployment and the persistence of existing unemployment.

Interpreting Market Signals

Current analysis reveals several key insights about labor market conditions and potential market implications :

Key Statistics (2020-2025):

- Peak Initial Claims: 1,575,000 (COVID-19 spike)

- Current Initial Claims: 240,000 (15.2% of peak)

- Recent 12-Week Trend: Rising 7.6%

- Volatility: Lower than historical average

Economic Insights:

- Initial claims below 300,000 typically indicate healthy labor market conditions

- Current levels suggest neutral market sentiment with no immediate recessionary signals

- The 4-week moving average helps filter out noise and provides more reliable trend identification

Advanced Analysis and Market Sensitivity

Recent research indicates that unemployment announcements can move financial markets, with the direction depending on current economic conditions. During expansions, higher unemployment might be viewed positively if it suggests potential monetary policy easing. During contractions, rising unemployment typically signals additional economic weakness.

The sensitivity of markets to jobless claims data makes this indicator particularly valuable for:

- Early Warning System: Identifying potential economic turning points before they appear in monthly employment reports

- Federal Reserve Policy: Providing timely labor market data that influences monetary policy decisions

- Market Timing: Helping investors anticipate market reactions to labor market developments

Conclusion

Python and FRED provide powerful tools for tracking and analyzing jobless claims data in real-time. The combination of pandas_datareader for data retrieval, pandas for processing, and matplotlib/seaborn for visualization creates a comprehensive framework for labor market analysis.

The 4-week moving average smooths weekly volatility and reveals underlying trends that are crucial for market sentiment analysis. Current data suggests a stable labor market with initial claims remaining well below levels that typically signal economic distress .

By implementing this analytical framework, investors and analysts can gain timely insights into labor market conditions and their potential impact on financial markets. The code and methodologies presented here provide a solid foundation for ongoing jobless claims monitoring and analysis.